Shiro Kumano, PhD.

This page is no longer updated. Please check out the new website.

2017-Current: Senior Research Scientist, Human Information Science Laboratory, NTT Communication Science Laboratories

2016-2017: Honorary Research Associate, Institute of Cognitive Neuroscience, University College London (UCL)

2009-2017: Research Scientist, Media Information Laboratory, NTT Communication Science Laboratories

2007-2009: Internship trainee, Media Information Laboratory, NTT Communication Science Laboratories

2006-2009: Doctoral course student, Sato Laboratory, Graduate School of Information Science and Technology, The University of Tokyo

E-mail: shirou.______.yc(at)hco.ntt.co.jp (______: my family name in lowercase letters)

Research Interests

|

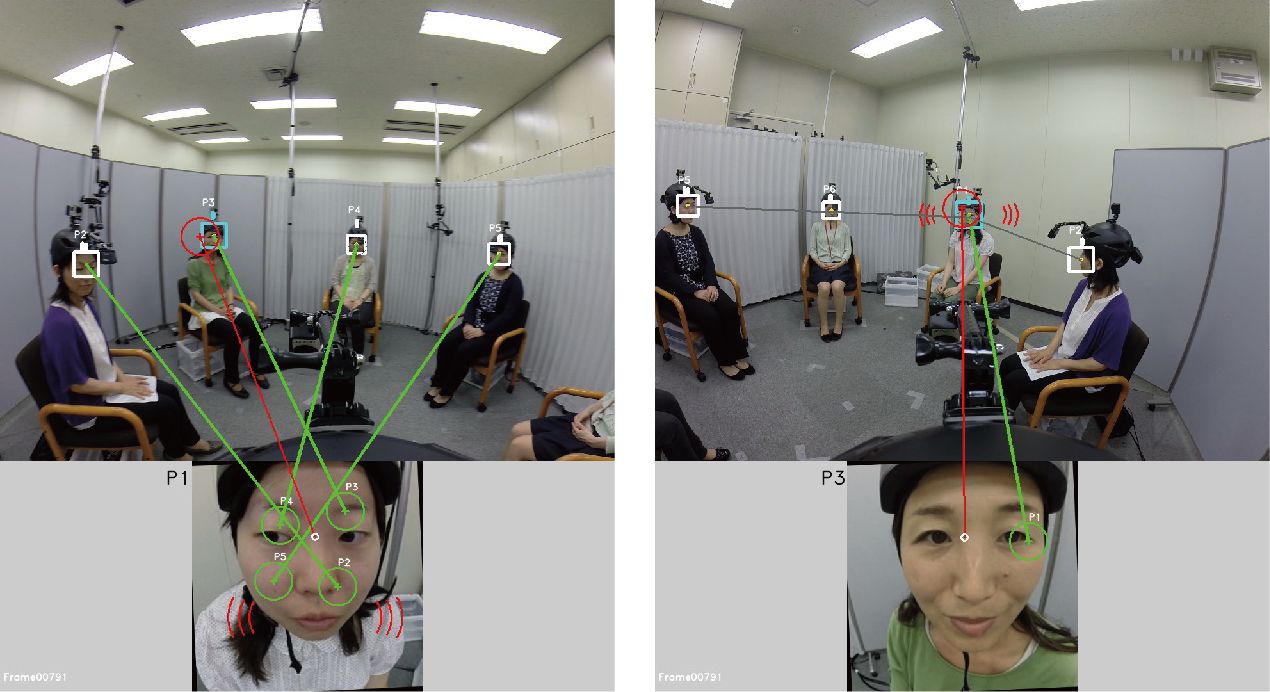

Gaze Analysis in Conversation Using Wearable Cameras |

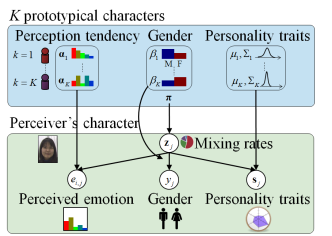

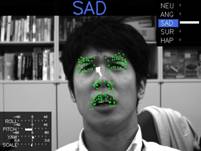

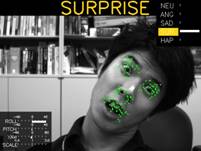

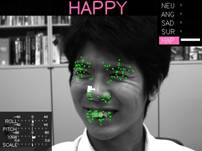

Emotion reader Modeling | Perceived Empathy Understanding | ||

|

|

|

||

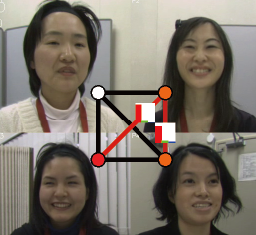

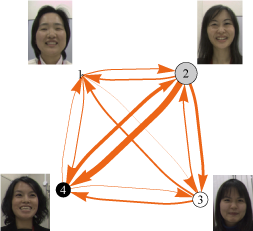

| Interpersonal Emotion Estimation | ||||

|

|

Awards

Outstanding Paper Award, Int'l Conf. Multimodal Interaction (ICMI 2014)

Honorable Mention Award, Asian Conference on Computer Vision (ACCV 2007)

Interactive Presentation Award, Human Communication Group Symposium 2012, 2015 x 2 (domestic conference)

Organized Session Award, Human Communication Group Symposium 2015 (domestic conference)

Human Communication Award, IEICE Human Communication Group, 2014 (domestic conference)

Interactive Session Award, Meeting on Image Recognition and Understanding (MIRU) 2011 (domestic conference)

Talks

Lecture

[1] Shiro Kumano, "Computational Model for Inferring How an Individual Perceives Others' Affective States," Lectures, Swiss Center for Affective Sciences, 2017.

Publications

Journal papers

[25] Shiro Kumano, Antonia Hamilton and Bahador Bahrami, "The role of anticipated regret in choosing for others", Scientific Reports, 11, Article No. 12557, 2021.

[24] Aiko Murata, Keishi Nomura, Junji Watanabe and Shiro Kumano, "Interpersonal physiological synchrony is associated with first person and third person subjective assessments of excitement during cooperative joint tasks", Scientific Reports, 11, Article No. 12543, 2021.

[23] Takayuki Ogasawara, Hanako Fukamachi, Kenryu Aoyagi, Shiro Kumano, Hiroyoshi Togo and Koichiro Oka, "Archery Skill Assessment Using an Acceleration Sensor", IEEE Trans. Human-Machine Systems (THMS), 2021 (accepted, early access).

[22] Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Ryuichiro Higashinaka and Yushi Aono, "Estimation of Personal Empathy Skill Level Using Dialogue Act and Eye-gaze during Turn-keeping/changing", IPSJ Journal, Vol. 62, No. 1, pp. 100-114, 2021. (in Japanese)

[21] Layla Chadaporn Antaket, Aiko Murata and Shiro Kumano, "Cognitive Model of Emotional Similarity Judgment Based on Individual Differences Scaling," IEICE Trans. on Information and Systems, Vol. J103-D, No.03, pp. 102-110, Mar. 2020. (in Japanese)

[20]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Ryuichiro Higashinaka, and Junji Tomita,

"Prediction of Who Will Be Next Speaker and When Using Mouth-Opening Pattern in Multi-Party Conversation,"

Multimodal Technologies Interact.,

Vol. 3, No. 4, 2019.

[19]

Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka,

"Estimating Empathy Skill Level using Gaze Behavior depending on Participant-role during Turn-changing/keeping,"

Transactions of Human Interface Society, Vol. 20, No. 4, pp. 447-456, 2018. (in Japanese)

[18]

Li Li, Shiro Kumano (equally contributed first author), Anita Keshmirian, Bahador Bahrami, Jian Li and Nicholas D. Wright,

"Parsing cultural impacts on regret and risk in Iran, China and the United Kingdom",

Scientific Reports, Vol. 8, 13862, 2018.

[17]

Layla Chadaporn Antaket, Masafumi Matsuda, Kazuhiro Otsuka and Shiro Kumano,

"Analyzing Generation and Cognition of Emotional Congruence using Empathizing-Systemizing Quotient",

International Journal of Affective Engineering,

Vol.17 No.3, pp. 183-192, 2018.

[open access from publisher's site]

[16]

Shiro Kumano, Kazuhiro Otsuka, Ryo Ishii, and Junji Yamato,

"Collective First-Person Vision for Automatic Gaze Analysis in Multiparty Conversations,"

IEEE Trans. Multimedia,

Vol. 19, Issue 1, pp. 107-122, 2017.

[pdf]

[15]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Using Respiration of Who Will be the Next Speaker and When in Multiparty Meetings",

ACM Trans. Interactive Intelligent Systems (TiiS),

Vol. 6, Issue 2, Article No. 20, 2016.

[14]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Prediction of Who Will be the Next Speaker and When using Gaze Behavior in Multi-Party Meetings",

ACM Trans. Interactive Intelligent Systems (TiiS),

Vol. 6, Issue 1, No. 4, 2016.

[13]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Predicting Next Speaker Based on Head Movement in Multi-Party Meetings",

IPSJ Journal,

Vol. 57, No. 4, pp. 1116-1127, 2016.

[12]

Dairazalia Sanchez-Cortes, Shiro Kumano, Kazuhiro Otsuka, Daniel Gatica-Perez,

"In the Mood for Vlog: Multimodal Inference in Conversational Social Video,"

ACM Trans. Interactive Intelligent Systems (TiiS), Vol. 5, No. 2, 9:1-24, 2015.

[11] Shiro Kumano, Kazuhiro Otsuka, Dan Mikami, Masafumi Matsuda, and Junji Yamato,

"Analyzing Interpersonal Empathy via Collective Impressions,"

IEEE Trans. Affective Computing,

Vol. 6, Issue 4, pp. 324-336, 2015.

[pdf]

[10] Shiro Kumano, Kazuhiro Otsuka, Masafumi Matsuda, and Junji Yamato,

"Analyzing Perceived Empathy Based on Reaction Time in Behavioral Mimicry,"

IEICE Trans. on Information and Systems, Vol. E97-D, No. 8, pp. 2008-2020, 2014.

[pdf]

[9] Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, and Junji Yamato,

"Predicting Next Speaker and Timing from Gaze Transition Patterns in Multi-Party Meetings,"

IEICE Trans., Vol. J97-A, No. 6, 2014. (in Japanese)

[8] Shiro Kumano, Kazuhiro Otsuka, Junji Yamato, Eisaku Maeda and Yoichi Sato, "Pose-Invariant Facial Expression Recognition Using Variable-Intensity Templates,"

International Journal of Computer Vision,

Vol. 83, No. 2, pp.178-194, 2009.

[pdf]

[7] Masafumi Matsuda, Kaito Yaegashi, Ikuo Daibo, Shiro Kumano, Kazuhiro Otsuka, and Junji Yamato, "An exploratory study of proxemics and impression formation among video communication users", The Transactions of Human Interface Society, Vol. 15, No. 4, pp. 433-442, 2013. (In Japanese)

[6] Kazuhiro Otsuka, Shiro Kumano, Masafumi Matsuda, and Junji Yamato, "MM-Space: Re-creating Multiparty Conversation Space based on Physically Augmented Head Motion by Dynamic Projection", IPSJ Trans., Vol. 54, No. 4, pp. 1450-1461, 2013. (In Japanese)

[5] Dan Mikami, Kazuhiro Otsuka, Shiro Kumano, and Junji Yamato, "Enhancing Memory-based Particle Filter with Detection-based Memory Acquisition for Robustness under Severe Occlusion," IEICE Trans. on Information and Systems, Vol. E95-D, No.11, pp. 2693-2703, 2012.

[4] Shiro Kumano, Kazuhiro Otsuka, Junji Yamato, Eisaku Maeda, and Yoichi Sato, "Simultaneous Estimation of Facial Pose and Expression by Combining Particle Filter with Gradient Method", IEICE Trans. on Information and Systems, Vol. J92-D, No.8, pp.1349-1362, 2009. (In Japanese)

[3] Shiro Kumano, Kazuhiro Otsuka, Junji Yamato, Eisaku Maeda, and Yoichi Sato, "Simultaneous Estimation of Facial Pose and Expression Using Variable-intensity Template", IPSJ Trans. on Computer Vision and Image Media, Vol. 1, No. 2, pp.50-60, 2008. (In Japanese)

[2] Shiro Kumano, Kazuhiro Otsuka, Junji Yamato, Eisaku Maeda, and Yoichi Sato, "Stochastical Facial Expression Recognition Method Using Variable-Intensity Template for Handling Head Pose Variation", Information Technology Letters, pp. 215-218, 2007. (In Japanese)

[1] Shiro Kumano, Yozo Fujino, Masato Abe, Junji Yoshida, and Takuya Matsusaki, "A Measurement System for Understanding Dynamic Behavior of Membranes by Image Analysis", Research Report on Membrane Structures, No. 17, pp.7-13, 2003. (In Japanese)

Conference papers

[38] Yan Zhou, Tsukasa Ishigaki and Shiro Kumano, "Deep Explanatory Polytomous Item-Response Model for Predicting Idiosyncratic Affective Ratings", in Proc. International Conference on Affective Computing & Intelligent Interaction (ACII 2021), 2021. (Oral)

[37] Hiroyuki Ishihara and Shiro Kumano, "Gravity-Direction-Aware Joint Inter-Device Matching and Temporal Alignment between Camera and Wearable Sensors", in Proc. ICMI '20 Companion, 2020.

[36] Aiko Murata, Shiro Kumano and Junji Watanabe, "Interpersonal physiological linkage is related to excitement during a joint task", in Proc. Annual Meeting of the Cognitive Science Society (CogSci 2020), 2020.

[35] Shiro Kumano, Keishi Nomura, "Multitask Item Response Models for Response Bias Removal from Affective Ratings," in Proc. International Conference on Affective Computing & Intelligent Interaction (ACII 2019), 2019. (Oral) (Please access the text of this paper via the link here; for some reason it cannot be read in the version published on IEEE Xplore.)

[34] Keishi Nomura, Shiro Kumano, Yuko Yotsumoto, "Multitask Item Response Model Revealed Bias in Estimated Emotional Features due to Response Style within the Open Affective Standardized Image Set (OASIS)", in Proc. The 52nd Annual Meeting of the Society for Mathematical Psychology (MathPsych 2019), 2019.

[33] Keishi Nomura, Aiko Murata, Yuko Yotsumoto, Shiro Kumano, "Bayesian Item Response Model with Condition-specific Parameters for Evaluating the Differential Effects of Perspective-taking on Emotional Sharing," in Proc. Annual Meeting of the Cognitive Science Society (CogSci 2019), 2019.

[32] M. Perusquia-Hernandez, S. Ayabe-Kanamura, K. Suzuki, S. Kumano, "The Invisible Potential of Facial Electromyography: a Comparison of EMG and Computer Vision when Distinguishing Posed from Spontaneous Smiles," in Proc. CHI Conf. Human Factors in Computing Systems (CHI 2019), Paper No. 149, 2019. (Full paper)

[31] Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Ryuichiro Higashinaka, and Junji Tomita, "Analyzing Gaze Behavior and Dialogue Act during Turn-taking for Estimating Empathy Skill Level," in Proc. ACM Int'l Conf. Multimodal Interaction (ICMI), 2018.

[30] Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka, "Analyzing Gaze Behavior during Turn-taking for Estimating Empathy Skill Level," In Proc. ACM Int'l Conf. Multimodal Interaction (ICMI), 2017.

[29] Shiro Kumano, Ryo Ishii, Kazuhiro Otsuka, "Computational Model of Idiosyncratic Perception of Others' Emotions," In Proc. Int'l Conf. Affective Computing and Intelligent Interaction (ACII 2017), pp. 42-49, 2017. [pdf]

[28] Shiro Kumano, Ryo Ishii, Kazuhiro Otsuka, "Comparing Empathy Perceived by Interlocutors in Multiparty Conversation and External Observers," In Proc. Int'l Conf. Affective Computing and Intelligent Interaction (ACII 2017), pp. 50-57, 2017.

[27] Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka, "Prediction of Next-Utterance Timing using Head Movement in Multi-Party Meetings," In Proc. Int'l Conf. Human-Agent Interaction (HAI2017).

[26] Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka, "Analyzing Mouth-Opening Transition Pattern for Predicting Next Speaker in Multi-party Meetings", In Proc. ACM Int'l Conf. Multimodal Interaction (ICMI), 2016.

[25] Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka, "Multimodal Fusion using Respiration and Gaze for Predicting Next Speaker in Multi-party Meetings", In Proc. ACM Int'l Conf. Multimodal Interaction (ICMI), pp. 99-106, 2015.

[24] Shiro Kumano, Kazuhiro Otsuka, Ryo Ishii, Junji Yamato, "Automatic Gaze Analysis in Multiparty Conversations based on Collective First-Person Vision," In Proc. International Workshop on Emotion Representation, Analysis and Synthesis in Continuous Time and Space (EmoSPACE). pp. 1-8, vol. 5, 2015. [pdf]

[23] Ryo Ishii, Shiro Kumano, Kazuhiro Otsuka,

"Predicting Next Speaker using Head Movement in Multi-party Meetings",

In Proc. IEEE Int'l Conf. Acoustics, Speech, and Signal Processing (ICASSP),

pp. 2319-2323, 2015.

[22]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Analysis of Respiration for Prediction of Who Will Be Next Speaker and When? in Multi-Party Meetings,"

In Proc. Int'l Conf. Multimodal Interaction (ICMI), 2014.

[Outstanding Paper Award]

[21]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Analysis of Timing Structure of Eye Contact in Turn-changing,"

In Proc. ACM Workshop on Eye Gaze in Intelligent Human Machine Interaction, 2014.

[20]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Junji Yamato,

"Analysis and Modeling of Next Speaking Start Timing based on Gaze Behavior in Multi-party Meetings",

In Proc. IEEE Int'l Conf. on Acoustics, Speech, and Signal Processing (ICASSP 2014), 2014.

[19]

Kazuhiro Otsuka, Shiro Kumano, Ryo Ishii, Maja Zbogar, Junji Yamato,

"MM+Space: n x 4 Degree-of-Freedom Kinetic Display for Recreating Multiparty Conversation Spaces", In Proc. International Conference on Multimodal Interaction (ICMI 2013), 2013.

[18]

Ryo Ishii, Kazuhiro Otsuka, Shiro Kumano, Masafumi Matsuda, Junji Yamato,

"Predicting Next Speaker and Timing from Gaze Transition Patterns in Multi-Party Meetings",

In Proc. International Conference on Multimodal Interaction (ICMI 2013), 2013.

[17]

Dairazalia Sanchez-Cortes, Joan-Isaac Biel, Shiro Kumano, Junji Yamato, Kazuhiro Otsuka and Daniel Gatica-Perez,

"Inferring Mood in Ubiquitous Conversational Video",

In Proc. Int'l Conf. on Mobile and Ubiquitous Multimedia (MUM2013), 2013.

[16]

S. Kumano, K. Otsuka, M. Matsuda, R. Ishii, J. Yamato,

"Using A Probabilistic Topic Model to Link Observers' Perception Tendency to Personality",

In Proc. Humaine Association Conference on Affective Computing and Intelligent Interaction (ACII), pp. 588-593, 2013.

[pdf]

[15]

S. Kumano, K. Otsuka, M. Matsuda, J. Yamato,

"Analyzing perceived empathy/antipathy based on reaction time in behavioral coordination",

In Proc. International Workshop on Emotion Representation, Analysis and Synthesis in Continuous Time and Space (EmoSPACE), 2013.

[pdf]

[14]

S. Kumano, K. Otsuka, D. Mikami, M. Matsuda and J. Yamato,

"Understanding Communicative Emotions from Collective External Observations",

In Proc. CHI '12 extended abstracts on Human factors in computing systems (CHI2012),

pp. 2201-2206, 2012.

[pdf]

[13]

Kazuhiro Otsuka, Shiro Kumano, Dan Mikami, Masafumi Matsuda, and Junji Yamato, "Reconstructing Multiparty Conversation Field by Augmenting Human Head Motions via Dynamic Displays", In Proc. CHI '12 extended abstracts on Human factors in computing systems (CHI '12), 2012.

[12]

Dan Mikami, Kazuhiro Otsuka, Shiro Kumano, and Junji Yamato, "Enhancing Memory-based Particle Filter with Detection-based Memory Acquisition for Robustness under Severe Occlusions",

In Proc. VISAPP2012, 2012.

[11]

Kazuhiro Otsuka, Kamil Sebastian Mucha, Shiro Kumano, Dan Mikami, Masafumi Matsuda, and Junji Yamato, "A System for Reconstructing Multiparty Conversation Field based on Augmented Head Motion by Dynamic Projection",

In Proc. ACM Multimedia 2011, 2011.

[10] L. Su, S. Kumano, K. Otsuka, D. Mikami, J. Yamato, and Y. Sato,

"Early Facial Expression Recognition with High-frame Rate 3D Sensing",

In Proc. IEEE Int'l Conf. Systems, Man, and Cybernetics (SMC 2011),

Oct., 2011.

[9] Takashi Ichihara, Shiro Kumano, Daisuke Yamaguchi, Yoichi Sato, Yoshihiro Suda, Li Shuguang,

"Evaluation of eco-driving skill using traffic signals status information",

In Proc. ITS World Congress (ITSWC2010), 2010.

[8] Yoshihiro Suda, Katsushi Ikeuchi, Yoichi Sato, Daisuke Yamaguchi, Shintaro Ono, Kenichi Horiguchi, Shiro Kumano, Ayumi Han, Makoto Tamaki,

"Study on Driving Safety Using Driving Simulator",

In Proc. International Symposium on Future Active Safety Technology (FAST-zero'11), 2011.

[7]

S. Kumano, K. Otsuka, D. Mikami and J. Yamato,

"Analyzing Empathetic Interactions based on the Probabilistic Modeling of the Co-occurrence Patterns of Facial Expressions in Group Meetings",

In Proc. IEEE International Conference on Automatic Face and Gesture Recognition (FG 2011),

pp. 43-50, 2011.

[pdf]

[6] Takashi Ichihara, Shiro Kumano, Daisuke Yamaguchi, Yoichi Sato and Yoshihiro Suda,

"Driver assistance system for eco-driving",

In Proc. ITS World Congress 2009, 2009.

[5] Shiro Kumano, Kenichi Horiguchi, Daisuke Yamaguchi, Yoichi Sato, Yoshihiro Suda and Takahiro Suzuki,

"Distinguishing driver intentions in visual distractions",

In Proc. ITS World Congress 2009, 2009.

[4]

S. Kumano, K. Otsuka, D. Mikami and J. Yamato,

"Recognizing Communicative Facial Expressions for Discovering Interpersonal Emotions in Group Meetings",

In Proc. International Conference on Multimodal Interfaces (ICMI),

Sept. 2009.

[pdf],

[poster]

[3]

S. Kumano, K. Otsuka, J. Yamato, E. Maeda and Y. Sato, "Combining Stochastic and Deterministic Search for Pose-Invariant Facial Expression Recognition",

In Proc. British Machine Vision Conference (BMVC), 2008.

[2]

S. Kumano, K. Otsuka, J. Yamato, E. Maeda and Y. Sato, "Pose-Invariant Facial Expression Recognition Using Variable-Intensity Templates",

In Proc. Asian Conference on Computer Vision (ACCV), Vol. I, pp.324-334, 2007.

[Honorable Mention Award]

[1] Junji Yoshida, Masato Abe, Shiro Kumano, and Yozo Fujino,

"Construction of A Measurement System for the Dynamic Behaviors of Membrane by Using Image Processing",

In Proc. Structural Membrane 2003 - International Conference on Textile Composites and Inflatable Structures, 2003.

Activities

Int'l Conf. Affective Computing and Intelligent Interaction (ACII 2019), Organizing Committee Member

IPSJ SIG of Computer Vision and Image Media (April 2009- March 2013), Steering Committee Member

IAPR Int'l Conf. Machine Vision Applications (MVA 2013-2015), Organizing Committee Member

IEEE Trans. Affective Computing (TAC), Reviewer

IEEE Trans. Circuits and Systems for Video Technology (TCSVT), Reviewer

Machine Vision and Applications, Reviewer

IEICE Trans., Reviewer

Int'l Conf. Affective Computing and Intelligent Interaction (ACII 2017), Senior Program Committee Member

IEEE Conf. Computer Vision and Pattern Recognition (CVPR 2013-), Program Committee Member

IEEE Int'l Conf. Computer Vision (ICCV 2013-), Program Committee Member

European Conf. Computer Vision (ECCV 2016-), Program Committee Member

Asian Conf. Computer Vision (ACCV 2014-), Program Committee Member

British Machine Vision Conference (BMVC 2017-), Program Committee Member

ACM Int'l Conf. Multimedia (ACM MM 2014), Program Committee Member

IEEE Int'l Conf. Automatic Face and Gesture Recognition (FG 2011-), Program Committee Member

Int'l Conf. Pattern Recognition (ICPR 2010-), Reviewer

IEEE Int'l Conf. Acoustics, Speech, and Signal Processing (ICASSP 2014), Reviewer

Int'l Audio/Visual Mapping Personality Traits Challenge & Workshop (MAPTRAITS'14), Technical Program Committee Member

The first Int'l Workshop Affective Analysis in Multimedia (AAM 2013), Technical Program Committee Member

Int'l Conf. Informatics, Electronics & Vision 2012 (ICIEV 2012-14), International Program Committee Member

The 15th Int'l Multimedia Modeling Conference (MMM 2009), Reviewer